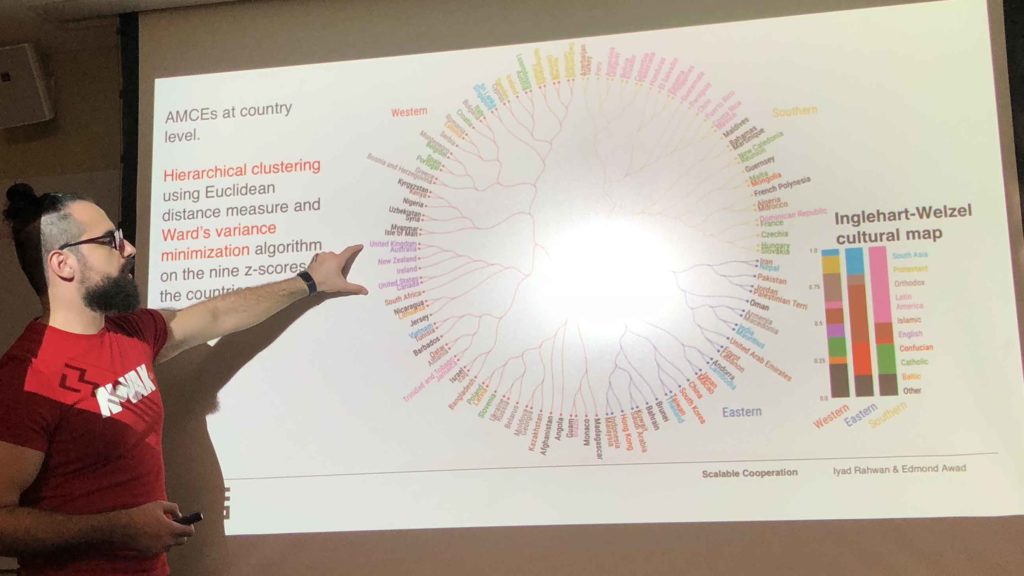

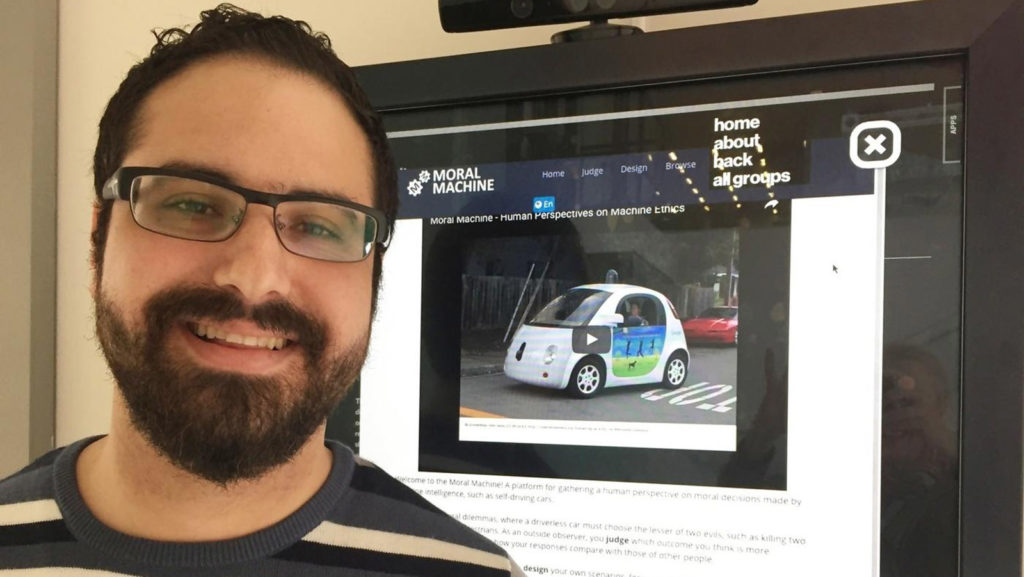

Edmond Awad and his MIT colleagues gave millions of people the self-driving-car trolley problem. In a recent visit to KSJ, he talked about what he learned. A self-driving car approaches a crosswalk and suddenly its brakes fail. It can avoid a person who’s crossing legally by swerving into the opposite lane, but then it will hit […]

A Lively Discussion, Even for KSJ: Edmond Awad on His ‘Moral Machine’

The MIT Media Lab scholar describes a video game in which driverless cars develop a conscience. Knight Science fellows aren’t so sure about the rules.
